Perle Systems

News

Revolutionizing Energy with the Efficiency of Wireless

Energy production and distribution sites are embracing wireless LANs and WANs for data collection, regulatory compliance, and efficient infrastructure management. These cellular networks are being used to reduce operational costs and improve system reliability.

It's not just about operational efficiency; it's about forging pathways to sustainable and compliant energy management practices by making smarter, data-driven decisions. Perle IRG Cellular Routers can accelerate the deployment of a high-performance wireless network by providing secure connectivity, location-based services, and remote management. Dive into our comprehensive analysis to explore how energy enterprises leverage cellular technology to make their operations leaner, safer, more reliable, and more sustainable.

IOLAN SCG LWM Secure Console Server recognized in 2022 Network Computing Awards

PRINCETON, N.J. (Aug 4, 2022): The public and industry judges for the 2022 Network Computing Awards have voted the Perle IOLAN SCG LWM Secure Console Server the ‘Network Management Product of the Year’ winner. This award recognizes an innovative product and company that has strategically added value to the performance of an organization’s network.

We are thrilled to win this prestigious award and thank everyone who has voted for us. Being recognized alongside some of the most esteemed companies in the world is a testament to the quality of our products.

The Perle IOLAN SCG LWM Console Server is a hardware solution that provides LTE, Wi-Fi, or modem Out-of-Band (OOB) access to securely reboot IT equipment that has crashed or been powered down during network outages. This is the only way to gain access to critical equipment when the network is down or when a device is turned off, in sleep mode, hibernating, or otherwise inaccessible.

The core idea is to preserve 24/7 network uptime by establishing secure direct access to the USB, RS232, or Ethernet console management port of critical IT assets such as routers, switches, firewalls, servers, power, storage, and telecom appliances. Disruption and downtime are minimized by providing better visibility of the physical environment and the physical status of equipment. This ensures business continuity through improved uptime and efficiencies.

IOLAN SCG LWM Console Servers provide the most robust OOBM solution to keep Data Centers secure with functionality that includes full routing (RIP, OSPF, and BGP) capabilities, Zero Touch Provisioning (ZTP), two-factor authentication (2FA), integrated firewall, advanced failover to multiple networks, AAA security features, RADIUS, TACACS+, & LDAP authentication, and leading data encryption tools.

The full list of winners can be found here: https://networkcomputingawards.co.uk/

About Network Computing: https://networkcomputing.co.uk/

Network Computing is the UK's longest-running publication dedicated to network management. It covers the technological, financial, regulation and compliance, and the human issues faced by organizations across all industry sectors as they try to operate secure, effective networks. Through publishing news, business and strategy articles, user profiles, independent product reviews, interviews, and comments, the magazine not only helps readers make better-informed purchasing decisions but also helps them to make the best use of the resources they already have.

Zero Trust is a popular concept in cybersecurity. It initially referred to enterprise security teams who built processes that removed all trust from end-users.

This is a valid approach. I, for example, like to run companies that do not mind if employees click on bad links. We understand it will be impossible to avoid, so we prepare for the inevitable — and have zero trust that there will be perfect behavior from our employees.

But when the “trust balloon” is squeezed to remove trust from end-users, then where does it go? There are two possible recipients: enterprise security organizations and the software that enterprises purchase.

Given that security organizations are ubiquitously understaffed and overwhelmed, market forces have stepped in to squeeze the trust balloon once more. Trust has been eliminated from the end-user and delegated by understaffed enterprise security organizations to big service providers and software companies. We trust Big Tech — Google, Facebook, Amazon, Microsoft, Slack, and Zoom — to be the stewards of our most critical data. We have given our trust to an industry built upon speed and risk acceptance that has no liability other than market forces.

Where shifting trust models go wrong

While it may seem a reasonable solution to trust Big Tech with your data, you need to first be clear about one thing: these companies are primarily interested in growing their market share, not security. For example, Zappos is okay with some level of fraud, Microsoft Teams is okay with occasional remote code execution, and SolarWinds was more profitable if they did not keep tabs on their software build processes. In these cases, data security and privacy were decoupled from profitability and valuation. Low quality and high risk were acceptable outcomes in pursuit of high valuations and executive wealth creation.

But the Department of Justice cannot operate in a similar risk model. It is not okay for Russia to have unfettered access to DOJ email and the Office of Personnel Management cannot be okay with the occasional breach giving China’s intelligence agency access to the personnel files of 22 million government employees. It’s obvious that our national security interests are not supported by the use of vulnerable software. And yet, the national security apparatus is reliant upon technology that prioritizes profitability and valuation above all else.

The effect of market forces on security

Market forces have dictated that a “move fast and break things” mentality is the most reliable way to achieve the highest possible market share and valuation. For a tech CEO, the longest path to billionaire status has been developing secure, well-engineered products. Our shifting trust models have placed the responsibility for data security at the feet of the decision-makers with the least incentive to build secure software.

I don’t mean to suggest that the billionaire CEOs of the world’s largest software companies are naturally inclined to abuse privacy and data — rather, they are profit-motivated geniuses who are naturally inclined to compete and win within the regulatory swim lanes given to them.

The importance of liability

The Clinton administration gave the U.S. technology industry a get out of jail free card with the Telecommunications Act of 1996. As a result, Silicon Valley dominated — innovation grew and stayed in the U.S. Moving fast and breaking things was the right approach. No certifications, no permitting, no consequence for security or privacy issues. Now is the time to examine liability and put CFOs and CEOs on the hook for dodgy engineering.

In 2013, HTC shipped 18 million vulnerable mobile devices and was fined by the Federal Trade Commission (FTC). In 2019, Google received a record $57M fine from the EU for privacy violations, and in the same year Facebook was hit with a record $5B fine for its privacy infractions. Just last December, Noah Phillips, a member of the FTC, testified to the Senate Committee on Commerce, Science, and Transportation that the FTC’s consumer privacy-enforcement actions against Facebook, TikTok, YouTube, Zoom, and other companies had already had a “greater impact than any others in the world.” A layperson could view this as progress and as directionally correct.

The sad reality is that these fines are so inconsequential that they actually promote recklessness. Google generates almost $370 million per day from ads. A “record” $57M fine is not a speed bump — it is an invitation to hit the gas. More than ever, market forces are signaling to the technology industry that ignoring security is their most profitable strategy. Enter the conga line of security vulnerabilities and breaches in 2020.

This is just a sampling of the security incidents in the past year that were most emblematic of poorly engineered software that impacted large enterprises and national security:

There are talented security minds employed at most large software companies, but their functions are starved and underserved. They will remain so unless regulators become serious about enforcement. Fines should be increased to levels that have a material impact. Sticks can be motivating, but carrots work too. Balance sheets should be audited and investment in security engineering increased industry-wide. Software vulnerabilities and data breaches should trigger mandatory oversight and increases in security budgets.

What can be done?

We should be requiring more from tech companies and creating regulatory frameworks that hold them liable for unacceptable product security. The question facing our industry is NOT whether the breach and response playbook used for building and selling video doorbells should be used by the Pentagon. We know the answer is no.

We just have to stop lowering our security standards in the name of convenience. The massive costs required to recover from the SolarWinds/Microsoft breach are not an acceptable burden for taxpayers to shoulder. We give all our trust to large technology providers, and trillions of dollars of wealth are created in these software companies. It's now time for these companies to own their fair share of liability as well.

Why Is Directory Listing Dangerous?

Directory listing is a web server function that displays the directory contents when there is no index file in a specific website directory. It is dangerous to leave this function turned on for the web server because it leads to information disclosure.

For example, when a user requests www.acunetix.com without specifying a file (such as index.html, index.php, or default.asp), the web server processes this request, returns the index file for that directory, and the browser displays the website. However, if the index file did not exist and if directory listing was turned on, the web server would return the contents of the directory instead.
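The decision described above can be sketched in a few lines of Python. This is a simplified model, not the logic of any particular web server: the function name, the `INDEX_NAMES` tuple, and the `"forbidden"` outcome (many servers answer 403 when there is no index file and listing is off) are assumptions made for illustration.

```python
from typing import Optional, Sequence, Tuple, Union

# Hypothetical default index names, taken from the examples in the text.
# Real servers make this list configurable.
INDEX_NAMES = ("index.html", "index.php", "default.asp")

Payload = Union[str, list, None]


def resolve_directory_request(
    files: Sequence[str],
    listing_enabled: bool,
    index_names: Sequence[str] = INDEX_NAMES,
) -> Tuple[str, Payload]:
    """Model how a server answers a request that names a directory.

    Returns ("index", filename) when an index file exists,
    ("listing", sorted file names) when it does not and listing is on,
    and ("forbidden", None) otherwise.
    """
    present = set(files)
    for name in index_names:
        if name in present:
            return ("index", name)
    if listing_enabled:
        # This is the information-disclosure case the article warns about.
        return ("listing", sorted(files))
    return ("forbidden", None)
```

With listing enabled, a directory that lacks an index file exposes every file name in it; with listing disabled, the same request discloses nothing.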

Many webmasters follow security through obscurity. They assume that if there are no links to files in a directory, nobody can access them. This is not true. Many web vulnerability scanners such as Acunetix easily discover such directories and all files if directory listing is turned on. This means that black hat hackers can also find such files easily. This is why directory listing should never be turned on, especially in the case of dynamic websites and web applications, including WordPress sites.
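As a rough illustration of how easily such pages can be spotted, the sketch below checks an HTML response body for the "Index of /..." page title that auto-generated listings from Apache and nginx typically carry. It is a heuristic only and is not how Acunetix or any other scanner works; the function name is hypothetical, and a custom listing template or a different server would not match.

```python
import re

# Matches a page title such as "<title>Index of /admin</title>".
_LISTING_TITLE = re.compile(r"<title>\s*Index of\s+/[^<]*</title>", re.IGNORECASE)


def looks_like_directory_listing(html: str) -> bool:
    """Return True if the HTML body has a typical auto-index page title."""
    return bool(_LISTING_TITLE.search(html))
```

Only run a check like this against sites you own or are authorized to test.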

Directory Browsing Without Directory Listing

Even if directory listing is disabled on a web server, attackers might discover and exploit web server vulnerabilities that let them perform directory browsing. For example, there was an old Apache Tomcat vulnerability, where improper handling of null bytes (%00) and backslash (\) made it prone to directory listing attacks.

Attackers might also discover directory indexes using cached or historical data contained in online databases. For example, Google’s cache database might contain historical data for a target, which previously had directory listing enabled. Such data allows the attacker to gain the information needed without having to exploit vulnerabilities.

Directory Listing Example

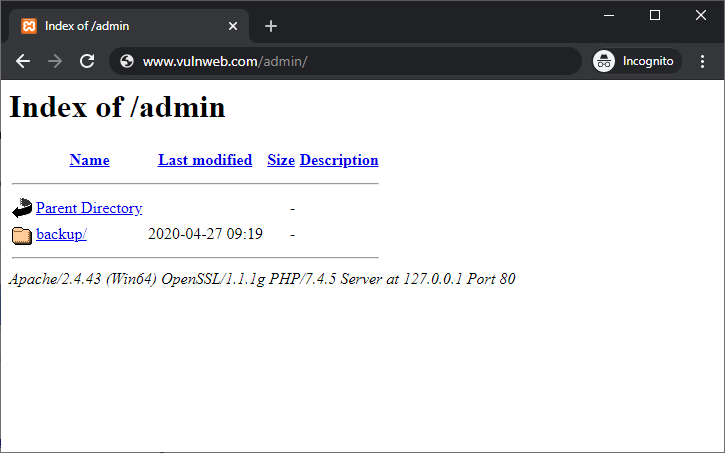

A user makes a website request to www.vulnweb.com/admin/. The response from the server includes the contents of the admin directory, as seen in the screenshot above.

From the above directory listing, you can see that in the admin directory there is a sub-directory called backup, which might include enough information for an attacker to craft an attack.

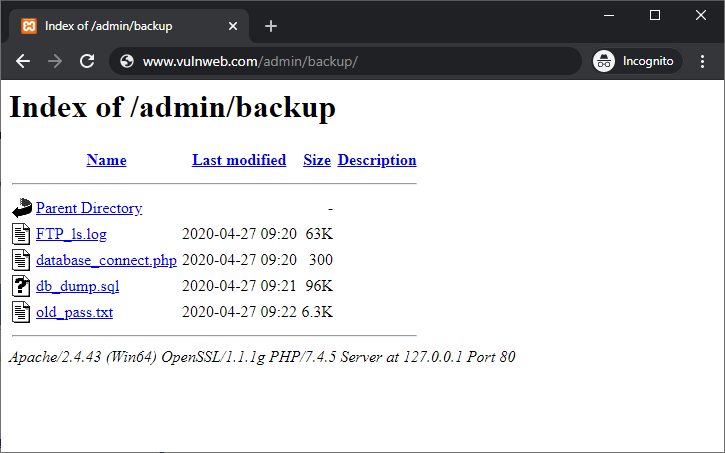

The attacker can display the whole list of files in the backup directory. This directory includes sensitive files such as password files, database files, FTP logs, and PHP scripts. It is obvious that this information was not intended for public view.

Misconfiguration of the web server has led to file list disclosure and the data is publicly available. Moreover, files like these, such as FTP logs, might contain other sensitive information such as usernames, IP addresses, and the complete directory structure of the web hosting operating system.

How to Disable Directory Listing

To disable directory listing, you must change your web server configuration. Here is how you can do it for the most popular web servers:

Apache Web Server

You can disable directory listing by setting the Options directive in the Apache httpd.conf file by adding the following line:

<Directory /your/website/directory>
    Options -Indexes
</Directory>

You can also add this directive in your .htaccess files but make sure to turn off directory listing for your entire site, not just for selected directories.

nginx

Directory indexing is disabled by default in nginx so you do not need to configure anything.

However, if it was turned on before, you can turn it off by opening the nginx.conf configuration file and changing autoindex on to autoindex off.
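To audit existing configuration files for the settings discussed above, a small script can flag lines that switch listing on. The sketch below is a line-based check under simplifying assumptions: it looks only for `autoindex on;` and for an `Options` line that contains `Indexes` or `+Indexes`, and it ignores includes, inheritance between sections, and `Options All`. The function name is hypothetical.

```python
import re
from typing import List, Tuple

_NGINX_ON = re.compile(r"^\s*autoindex\s+on\s*;", re.IGNORECASE)
_APACHE_OPTIONS = re.compile(r"^\s*Options\s+(.*)$", re.IGNORECASE)


def find_listing_directives(config_text: str) -> List[Tuple[int, str]]:
    """Return (line number, line) pairs that appear to enable directory listing."""
    hits = []
    for lineno, line in enumerate(config_text.splitlines(), start=1):
        active = line.split("#", 1)[0]  # ignore anything after a comment marker
        if _NGINX_ON.match(active):
            hits.append((lineno, line.strip()))
            continue
        match = _APACHE_OPTIONS.match(active)
        if match:
            tokens = [t.lower() for t in match.group(1).split()]
            # "-Indexes" disables listing, so only the enabling forms are flagged.
            if "indexes" in tokens or "+indexes" in tokens:
                hits.append((lineno, line.strip()))
    return hits
```

An empty result means none of these specific directives were found in the text; it does not prove that listing is disabled everywhere on the server.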